I am a Ph.D. student in Computer Science at Stanford University, working on NLP and ML.

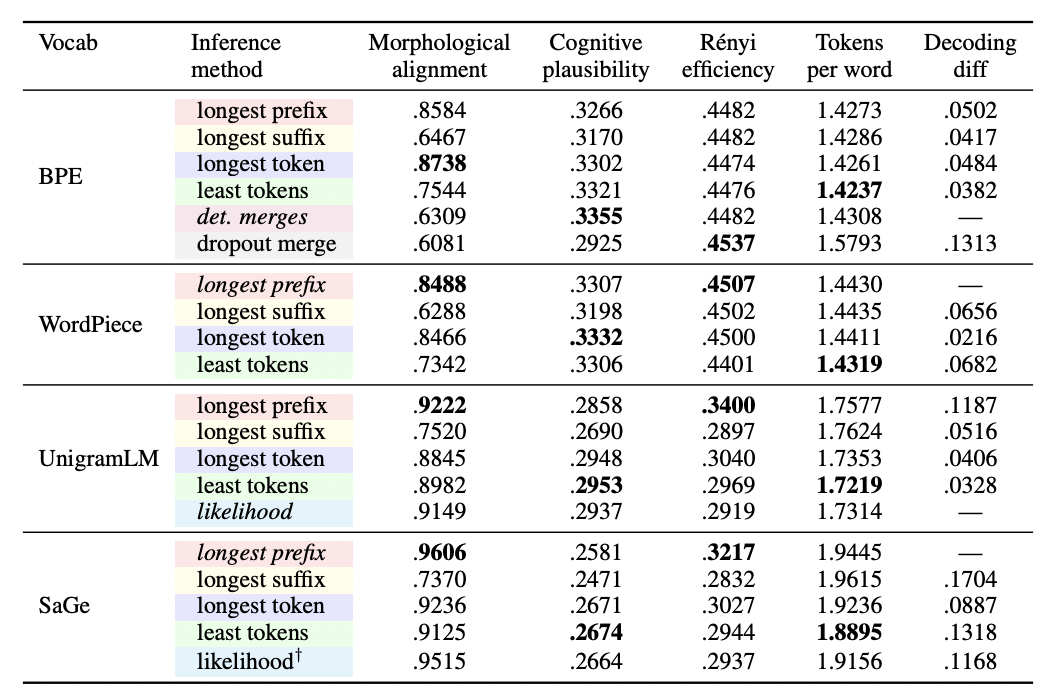

Before Stanford, I worked as an engineer at Meta. I completed my B.Sc. and M.Sc. in Computer Science at Ben-Gurion University, where I worked on evaluating tokenization algorithms for language models.

Feel free to reach out!